Will Technology be the Downfall of Mankind? The Theory of Singularity

History is rife with end-of-the-world predictions. Over the centuries, as mankind has questioned its origins, it has also wondered if and when life as we know it will end. Believe it or not, mankind is very unlikely to be destroyed by zombies, but the theory of singularity comes up in nearly every serious discussion about the fate of mankind in relation to technological development. But what is a singularity?

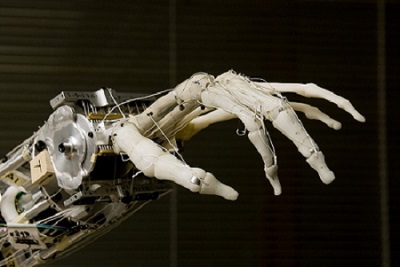

Robot Hand by University of Washington Office of News and Information

What exactly is Technological Singularity?

Technological singularity refers to a theoretical point in time at which true artificial intelligence (AI) is created. True AI is any robotic or computer system that is not only capable of recursively improving and redesigning itself, but is also able to design AI systems that are better than itself. These newly created AI systems in turn design systems that are even more intelligent, resulting in a continuous cycle in which increasingly intelligent computer systems are created.

At some point within this cycle, it is expected that computer systems will be created whose intelligence far surpasses any human intellect. In fact, the theory states that the level of intellect of these AI systems will become unfathomable to the human mind. At this point, the danger arises that such AI becomes highly unpredictable. If its goals have evolved to conflict with the goals and morality of humanity, there is little chance of controlling such a superintelligence.
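To make the cycle concrete, here is a toy sketch in Python. It assumes, purely for illustration, that every system designs a successor that is a fixed factor more capable than itself. The function name `generations_to_surpass` and the numbers passed to it are hypothetical; they are not estimates from any of the researchers quoted in this article.

```python
def generations_to_surpass(start: float, target: float, gain: float) -> int:
    """Count design generations until capability exceeds `target`.

    `start`, `target` and `gain` are made-up illustrative values,
    not measurements or forecasts.
    """
    if start <= 0 or gain <= 1.0:
        raise ValueError("need start > 0 and gain > 1 for the cycle to grow")
    capability = start
    generations = 0
    while capability <= target:
        capability *= gain  # each system designs a somewhat better successor
        generations += 1
    return generations


# Hypothetical scenario: each generation is 1.5 times as capable as the last,
# and the target is set at 100 times the starting capability.
print(generations_to_surpass(start=1.0, target=100.0, gain=1.5))
```

Under these assumed numbers, it takes only a handful of generations to pass the target, which is the intuition behind the runaway cycle. Whether real systems could improve their successors by any constant factor at all is exactly what the debate is about.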

Sci-Fi or Real-Life? Who believes this theory?

Indeed, it all begins to sound like the premise of a very promising sci-fi thriller. What lends this theory some realism is the seriousness with which several leading scientists have discussed it. Well-known theoretical physicist Stephen Hawking was recently quite vocal on the matter of singularity, and he is of the opinion that advances in the field of AI might bring on an apocalypse. In his 2014 interview with the British Broadcasting Corporation (BBC), he said, “The development of full artificial intelligence could spell the end of the human race.”

Hawking’s views closely mirror those of mathematician Vernor Vinge, the very person who coined the term ‘Technological Singularity’ in 1982. In his 1993 essay, “The Coming Technological Singularity: How to Survive in the Post-Human Era”, Vinge goes as far as stating that within thirty years of 1993, the technological means to create superhuman intelligence will exist, and that shortly afterwards the human era will end.

Fractal Spirit by Rudolf Getel

Who’s on the other side?

On the flip side of this debate are other futurists like robotics pioneer Rodney Brooks, who is firmly of the opinion that malevolent AI of such overwhelming superintelligence is nothing to worry about for the next few hundred years at least.

So, what are the main arguments?

According to Brooks, the main problem with the idea of AI soon becoming powerful enough to destroy mankind is the huge gap between the recent advances in specific aspects of AI and the actual complexity involved in creating a sentient volitional intelligence. From his viewpoint, this complexity has been largely underestimated while our (albeit impressive) advances in technology have been overestimated.

Today, it is an impressive feat for a computer to be able to recognize an image as that of a dog. However, regardless of how accurately a computer can sort a set of images into animal types, it is still impossible to say that this computer innately understands what a cat or a dog is. How do you begin to teach a machine insight? How do you teach it sentience? Brooks makes the argument that even if the AI systems that we create now (or in the next few decades) are able to create other intelligent machines, there is still no guarantee that these machines will have the volition and the sentience required to take over the world.

Proponents of Technological Singularity might try to challenge Brooks’ points by bringing into account the rate at which technology has developed so far. Using the law of accelerating returns, futurist Ray Kurzweil explains why he expects singularity to occur as soon as 2045. The law states that fundamental measures of information technology follow predictable and exponential trajectories. According to him, humans find it difficult to believe just how fast technology will continue to develop because we experience change linearly while technology advances exponentially.

He writes, “As exponential growth continues to accelerate into the first half of the twenty-first century, it will appear to explode into infinity, at least from the limited and linear perspective of contemporary humans.” One can easily make the assumption that Kurzweil expects the world to go from being impressed by a computer recognizing a dog to expecting (and getting) much bigger and better results very quickly; maybe even sentience.
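The gap between a linear expectation and an exponential trajectory is easy to see with a little arithmetic. The Python sketch below compares the two. The starting value, the yearly step and the two-year doubling time are assumptions chosen only for illustration, and the function names are hypothetical; none of these figures come from Kurzweil.

```python
def linear_growth(start: float, step: float, years: float) -> float:
    """Value after `years` if a fixed amount `step` is added every year."""
    return start + step * years


def exponential_growth(start: float, doubling_time: float, years: float) -> float:
    """Value after `years` if the quantity doubles every `doubling_time` years."""
    return start * 2 ** (years / doubling_time)


# Assumed scenario: both curves start at 1; the linear one gains 1 per year,
# the exponential one doubles every 2 years.
for years in (0, 10, 20, 30):
    print(years,
          linear_growth(1.0, 1.0, years),
          exponential_growth(1.0, 2.0, years))
```

With these assumed values, the two curves are in the same ballpark after ten years (11 versus 32), but after thirty years the linear curve has reached 31 while the exponential one has reached 32,768. An observer who extrapolates from the early, gentle part of the curve will badly underestimate what comes later.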

Different people see different singularity end points

One big difference between the thinking of Ray Kurzweil and that of Stephen Hawking, discussed earlier, is that Kurzweil does not expect superintelligent AI to murder us all in our sleep. Instead, he expects that humanity will be ushered into a new era in which machines will have the capacity and the insight to tackle problems like world hunger and pollution and to advance fields like nanotechnology and space exploration. This superintelligence will then be turned towards improving the human condition in every respect, ultimately making man immortal! This is perhaps the most optimistic end product of singularity.

Employing safeguards

When all is said and done, the only thing that remains to note is that the year is 2015 and, as far as we know, nobody owns a time machine yet. There is no way to know what will happen in 2045. Given the zeal with which companies like Facebook and Google seek to create more intelligent machines, there is no doubt that development in the field of AI is far from over.

However, many believers in the theory of singularity ask that, while this technology is developed, we at least consider all the potential risks that come with it. Nick Bostrom, a philosopher at the University of Oxford, put it succinctly when he wrote, “if you want unlimited intelligence, you’d better figure out how to align computers with human needs.” Perhaps it is time to start figuring out how to program human values and morality into our machines.

Fiyinfoluwa Soyoye is a Millennium Youth Camp alumna studying Computer Science and Mathematics at the University of Helsinki. She is interested in robotics, science fiction and music.
